Advising with Intelligence: Aligning AI in Academic Advising with UC Principles and the Prosci AI Integration Framework

Where AI Belongs—and Where It Doesn’t—in Academic Advising

Section 1: Introduction

Advising is where policy meets life—where a single conversation can shift a student’s trajectory, and where the wrong automation can quietly erode trust.

As artificial intelligence (AI) becomes increasingly embedded in higher education, the core question is no longer “Can we automate this?” It is:

“Should we?”

Should we use AI to deliver an academic alert?

To recommend a course load?

To engage a student in crisis?

The answer depends not just on what AI can do—but on what it should do. This article offers a values-driven framework to guide that discernment. By evaluating advising tasks through both operational utility and ethical integrity, institutions can integrate AI in ways that enhance—not diminish—the relational heart of student support.

Academic advisers play a pivotal role in shaping student success. They are not just information providers; they are connectors, mentors, and advocates. Advising is the campus function where the institutional mission meets lived student experience. That is what makes it an ideal test case for AI integration.

In this piece, I focus on academic advising to show how we can align AI with our highest responsibilities:

To support student growth holistically—academically, personally, and professionally

To uphold human dignity, cultural sensitivity, and developmental care

To strengthen—not replace—the human relationships at the heart of learning

We do this by applying two complementary frameworks:

The Prosci AI Integration Framework, which helps classify tasks as:

Human-Exclusive – work that must remain human-led

With Me – work best performed collaboratively with AI

For Me – work that AI can handle independently

The University of California’s AI Principles, which serve as an ethical backbone, emphasizing:

Appropriateness

Transparency

Accuracy, Reliability, and Safety

Fairness and Non-Discrimination

Privacy and Security

Human Values

Shared Benefit and Prosperity

Accountability

Together, these frameworks allow us to implement AI in a way that is strategic, humane, and deeply student-centered.

Section 2: Core Job Duties of an Academic Adviser

Academic advising is complex, relational, and multi-dimensional. It blends coaching, compliance, cultural awareness, data interpretation, and institutional navigation. These eight core responsibilities represent the breadth of adviser impact—and offer a lens to assess where AI may enhance or endanger their mission.

1. Provide Individual Academic Advising

Advisers meet one-on-one with students to:

Interpret degree requirements, GE patterns, and program pathways

Explore majors and minors aligned with student goals

Navigate academic recovery (e.g., probation, reinstatement)

Support personal and identity-based challenges that impact progress

🔍 AI Insight: This task is high in human complexity. Advising bots may support prep work, but the live conversation requires emotional and cultural fluency.

2. Monitor Academic Progress

Advisers analyze:

Transcripts, GPA trends, and degree audits

Registration patterns and milestones

Holds and academic standing flags

🔍 AI Insight: Ideal for “With Me” AI use—surfacing risks and suggesting interventions, while humans contextualize the data.

3. Support Student Success and Intervention

Includes:

Connecting students with tutoring, basic needs, disability services

Navigating life disruptions (illness, housing, caregiving)

Advocating for policy flexibility

🔍 AI Insight: Risk of harm if automated poorly. Requires trust, equity lens, and context. Use AI only to support—not initiate—interventions.

4. Facilitate Orientation and Onboarding

Advisers:

Explain curriculum and enrollment at orientation

Conduct skill-building workshops

Integrate academic messaging with student affairs, faculty, housing

🔍 AI Insight: Some delivery can be AI-augmented (e.g., chatbots for FAQs), but connection and reassurance still demand human presence.

5. Manage Advising Records and Notes

Tasks include:

Documenting interactions in CRMs or SIS

Updating plans and follow-ups

Ensuring FERPA compliance

🔍 AI Insight: Strong “For Me” candidate. Automate tagging, formatting, and summarizing—while humans retain discretion over sensitive entries.

6. Conduct Student Outreach

Outreach types:

Proactive (e.g., low GPA, undeclared, transfer)

Reactive (missed appointments, risk flags)

Campaigns (graduation prep, registration nudges)

🔍 AI Insight: Great for AI-assisted content creation and segmentation. Human tone and timing must still guide the effort.

7. Collaborate with Faculty and Academic Units

Includes:

Clarifying curriculum pathways

Discussing student needs with instructors

Helping resolve enrollment bottlenecks

🔍 AI Insight: AI can support data prep and bottleneck identification—but human diplomacy remains essential.

8. Contribute to Assessment and Data Review

Responsibilities:

Input into advising-related learning outcomes

Support for student success metrics (retention, GPA, etc.)

Participation in accreditation

🔍 AI Insight: This is fertile ground for AI to automate dashboards, generate insights, and detect patterns—under human interpretation.

Section 3: Using the Prosci AI Integration Framework to Differentiate Human-Exclusive, Augmented, and Automatable Tasks


As institutions integrate AI into advising, the first step is not technical—it’s strategic triage.

What work belongs to humans, what can be enhanced by AI, and what can be automated entirely?

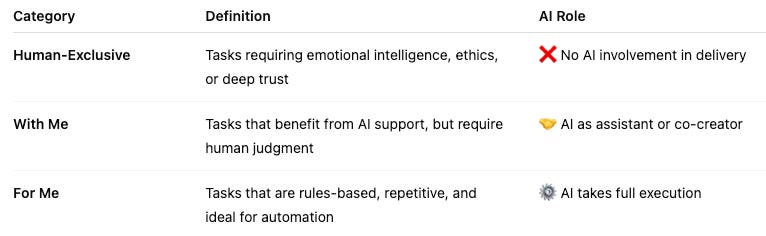

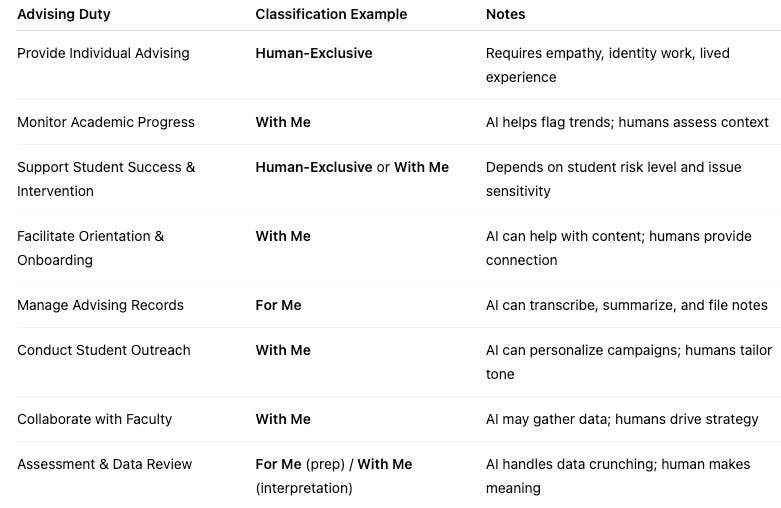

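The Prosci AI Integration Framework helps answer that question. It categorizes tasks across the three levels of AI suitability introduced earlier: Human-Exclusive, With Me, and For Me.

We now apply this framework to advising. Three of the core duties illustrate where AI adds capacity and where human safeguards remain essential: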

Advising AI Use Cases with Human Safeguards

Monitor Academic Progress

AI can generate early alerts from real-time SIS data and surface risk patterns.

But only humans can interpret whether a low GPA is due to grief, burnout, or systemic exclusion—and respond appropriately.

Conduct Student Outreach

AI can segment students by risk level, personalize outreach messages, and schedule nudges based on registration timelines.

But only humans can sense when a message might retraumatize, misfire culturally, or land as impersonal noise—especially for students already disengaged or distrustful of the institution.

Support Student Success and Intervention

AI can suggest referrals based on keyword analysis in notes or detect behavioral risk signals from platform usage.

But only humans can discern if a student’s silence signals overwhelm, stigma, or systemic harm—and offer compassion, not just compliance.

🎯 How to Use This Framework

Use this matrix in AI governance meetings, advising strategy sessions, or campus planning workshops.

For each duty, ask:

What value does human judgment add?

Where can AI increase capacity without reducing care?

What risks arise from over-automation?
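The three questions above can be turned into a rough first-pass screen for governance discussions. The Python sketch below is hypothetical and is not part of the Prosci framework: the `TaskProfile` fields and the rule order are assumptions, chosen so that the sample results match the AI Insights given earlier for each duty (progress monitoring as “With Me,” note formatting as “For Me,” the live conversation as human-led).

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """The three Prosci task categories used in this article."""
    HUMAN_EXCLUSIVE = "Human-Exclusive"
    WITH_ME = "With Me"
    FOR_ME = "For Me"


@dataclass
class TaskProfile:
    """Hypothetical screening answers for one advising task."""
    name: str
    needs_emotional_or_cultural_judgment: bool  # does human judgment add core value?
    high_stakes_if_wrong: bool  # could over-automation harm a student?


def triage(task: TaskProfile) -> Tier:
    """Assign a tier with an illustrative, deliberately conservative rule order."""
    # Relational work that depends on empathy or cultural fluency stays human-led.
    if task.needs_emotional_or_cultural_judgment:
        return Tier.HUMAN_EXCLUSIVE
    # High-stakes work may be AI-assisted, but a human stays in the loop.
    if task.high_stakes_if_wrong:
        return Tier.WITH_ME
    # Routine, low-stakes work can be delegated to AI.
    return Tier.FOR_ME


duties = [
    TaskProfile("Live advising conversation", True, True),
    TaskProfile("Monitor academic progress", False, True),
    TaskProfile("Format and tag advising notes", False, False),
]
for duty in duties:
    print(f"{duty.name}: {triage(duty).value}")
```

A screen like this only structures the conversation. The final classification should still come from advisers and governance groups who understand the students and context involved.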

Section 4: Anchoring Advising AI Use in UC Principles and the Prosci Integration Framework

Ethical clarity must precede technical implementation.

Even the best AI tools can harm students if applied without regard for human dignity, trust, and equity. That’s why responsible advising AI must be evaluated through two complementary lenses:

The UC AI Principles, which define what responsible, fair, and human-centered AI use looks like across the institution

The Prosci AI Integration Framework, which helps classify tasks based on their suitability for automation or augmentation

Together, these models allow institutions to answer two critical questions:

Should we apply AI here? (UC Principles)

If so, how? (Prosci Task Fit)

This section breaks down how each of the eight UC AI Principles applies directly to AI use in academic advising.

UC AI Principle 1: Appropriateness

AI should not be used just because it's possible.

In advising, this means asking:

Does this task require cultural sensitivity or emotional judgment?

Would automation harm student trust or well-being?

🛑 Red flag: Automatically emailing dismissal notices may be “efficient,” but it is ethically inappropriate.

UC AI Principle 2: Transparency

Students and staff must know when AI is involved.

Is the student aware their recommendation was AI-generated?

Can advisers explain how decisions were made?

📣 Tip: Flag AI-assisted actions in student dashboards or messages—“Generated with AI Assistance.”

UC AI Principle 3: Accuracy, Reliability, and Safety

AI systems must work correctly and safely, especially in high-stakes areas.

Do course suggestions follow catalog logic?

Are risk flags updated in real time?

Is financial aid information verified?

🚨 Impact: A flawed algorithm can derail degree plans or funding eligibility.

UC AI Principle 4: Fairness and Non-Discrimination

AI should not widen equity gaps.

Are students of color flagged more often for “risk”?

Are alternative paths (e.g., part-time, transfers) treated as “lesser”?

Is there bias in which students get nudged?

⚖️ Action: Audit AI tools for disparate impact across race, disability, first-gen status, and more.
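A disparate-impact audit can begin with a simple comparison of how often each student group is flagged. The Python sketch below is one illustrative approach, not a UC-mandated method: it computes each group's flag rate and its ratio to the least-flagged group's rate. The 1.25 cutoff is an assumption adapted from the “four-fifths” rule of thumb in U.S. employment-selection guidance, and the group labels and counts are made-up example data.

```python
from collections import defaultdict


def flag_rates(records):
    """Share of students flagged 'at risk' in each group.

    `records` is an iterable of (group, was_flagged) pairs.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_report(records, threshold=1.25):
    """Compare each group's flag rate with the least-flagged group's rate.

    threshold=1.25 is an assumed cutoff: the inverse of the 0.8 ratio in the
    "four-fifths" rule of thumb. Groups above it are marked for human review.
    """
    rates = flag_rates(records)
    baseline = min(rates.values())
    report = {}
    for group, rate in rates.items():
        if baseline > 0:
            ratio = rate / baseline
        elif rate > 0:
            ratio = float("inf")  # flagged at all while another group never is
        else:
            ratio = 1.0  # neither this group nor the baseline is ever flagged
        report[group] = (rate, ratio, ratio > threshold)
    return report


# Made-up illustrative data: 100 students per group.
records = (
    [("Group A", True)] * 10 + [("Group A", False)] * 90
    + [("Group B", True)] * 20 + [("Group B", False)] * 80
)
for group, (rate, ratio, needs_review) in sorted(disparity_report(records).items()):
    print(f"{group}: flag rate {rate:.2f}, ratio {ratio:.2f}, review={needs_review}")
```

A ratio above the cutoff is a prompt for human investigation, not proof of bias. Small groups can produce large ratios by chance, so a real audit should also consider sample sizes and intersecting identities (for example, first-gen students with disabilities).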

UC AI Principle 5: Privacy and Security

Advising data is sensitive and protected.

Do generative tools send student data to external, publicly accessible APIs?

Is FERPA-protected data kept internal and encrypted?

Can AI infer personal circumstances from indirect data?

🔒 Reminder: Academic struggles, mental health issues, and financial hardship are not just datapoints—they’re deeply human disclosures.

UC AI Principle 6: Human Values

AI must uphold dignity, agency, and relationship.

Does the student feel seen or sorted?

Is the adviser still present in the process?

Are we building trust or simply routing tasks?

❤️ Litmus Test: Would this tool strengthen my relationship with the student—or replace it?

UC AI Principle 7: Shared Benefit and Prosperity

AI should uplift all students—not just those who are easy to serve or tech-savvy.

Are multilingual or disabled students able to access the same insights?

Are first-gen students receiving relevant nudges?

📈 Design Principle: Equity must be baked in—not retrofitted.

UC AI Principle 8: Accountability

Institutions must remain responsible for AI decisions and errors.

Can students challenge an AI recommendation?

Do staff know how to report harm?

Are systems regularly audited?

🧭 Rule: Every AI-influenced action should be reviewable—and reversible—by a human.
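The transparency tip and this rule can be built into how AI-influenced actions are recorded. The Python sketch below is a hypothetical data model, not a feature of any particular CRM or SIS: an action carries the “Generated with AI Assistance” label from Principle 2 when AI was involved, counts as active only after a named adviser approves it, and can be reversed by a human with a logged reason. The class, student ID, and adviser name are illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

AI_LABEL = "Generated with AI Assistance"


@dataclass
class AdvisingAction:
    """Hypothetical record of an AI-influenced advising action."""
    student_id: str
    description: str
    ai_assisted: bool
    approved_by: Optional[str] = None
    reversed_by: Optional[str] = None
    history: List[str] = field(default_factory=list)

    def _log(self, event: str) -> None:
        # Timestamped audit trail so every step can be reviewed later.
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        self.history.append(f"{stamp} {event}")

    def student_facing_text(self) -> str:
        # Transparency: disclose AI involvement wherever the student sees it.
        suffix = f" ({AI_LABEL})" if self.ai_assisted else ""
        return self.description + suffix

    def approve(self, adviser: str) -> None:
        # A named human must sign off before the action takes effect.
        self.approved_by = adviser
        self._log(f"approved by {adviser}")

    def reverse(self, adviser: str, reason: str) -> None:
        # Any approved action can be undone by a human, with a stated reason.
        if self.approved_by is None:
            raise ValueError("Only approved actions can be reversed.")
        self.reversed_by = adviser
        self._log(f"reversed by {adviser}: {reason}")

    @property
    def is_active(self) -> bool:
        return self.approved_by is not None and self.reversed_by is None


action = AdvisingAction("S123", "Suggested tutoring referral", ai_assisted=True)
action.approve("adviser_lee")
action.reverse("adviser_lee", "Student is already enrolled in tutoring")
print(action.student_facing_text())
print(action.is_active)
```

Keeping the audit trail on the record itself is a simplification for readability; an institutional system would more likely write these events to a separate, access-controlled log that auditors can review.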

Closing Insight

Ethical AI in advising is not a compliance checkbox—it is a governance obligation. These principles must be embedded in procurement, implementation, training, and evaluation. When applied well, they protect not only our students but also the soul of the advising profession.

Section 5: Situating Advising in the Campus AI Ecosystem

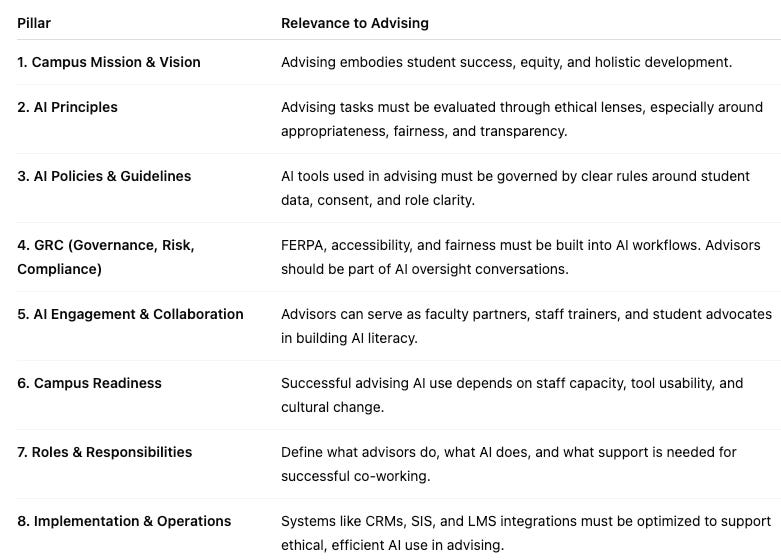

To ensure AI use in academic advising is not fragmented or reactive, it must be embedded within a broader, mission-aligned AI strategy. That’s the purpose of the Campus AI Framework—a system-wide structure that guides responsible adoption, use, and governance of AI across the institution.

Advising is not peripheral to this framework. It is a frontline test case for whether we can implement AI in a way that scales without losing trust, care, or equity.

Advising and the Campus AI Framework

The Campus AI Framework rests on eight pillars, and advising intersects with many of them.

Advising Within the Teaching & Learning Domain

Within the Campus AI Framework’s broader architecture, academic advising lives in the Teaching & Learning Domain—but its reach extends far beyond scheduling and degree progress.

Advisers are educators, sensemakers, and system navigators. AI that supports them must be held to that same interdisciplinary, high-impact standard.

AI in advising touches:

Orientation and onboarding

Retention and success analytics

Equity interventions

LMS pathways and progress nudges

Student development and self-authorship

In this sense, advising is a mirror and model for how AI can serve human flourishing—if designed with care.

Anchoring in ERAT: Ethical, Responsible, Accountable, Trustworthy

All AI use in advising must pass the ERAT test:

Ethical – Does this respect the dignity and agency of every student?

Responsible – Is there oversight, training, and policy alignment?

Accountable – Can errors be traced, explained, and corrected?

Trustworthy – Will students and staff feel safe using it?

ERAT is not a filter to apply after AI is deployed—it is a foundation to build from the very beginning.

Institutional Imperative

Advising is one of the most emotionally complex, legally sensitive, and equity-critical functions on campus.

That’s why its AI integration must be:

Governed by ethics and transparency

Supported by infrastructure and policy

Shaped by those who understand students best—advisers themselves

Section 6: Conclusion and Final Reflection

Advising is not just an administrative function—it is the emotional, academic, and ethical heartbeat of student success.

It’s where life plans are rewritten. Where institutional policy is translated into personal meaning. Where one conversation can determine whether a student stays, leaves, thrives, or disappears.

And that is precisely why AI must serve advising—never replace it.

In a time of shrinking bandwidth and growing complexity, artificial intelligence offers powerful possibilities:

To offload the routine

To amplify the essential

To extend the reach of care

But only if guided by values.

Protect. Partner. Perform.

This article offered three tools to help you implement AI intentionally in advising:

Protect: Use the UC AI Principles to safeguard dignity, trust, and equity.

Partner: Use the Prosci Integration Framework to determine where AI can assist—without replacing humanity.

Perform: Embed AI within your Campus AI Framework to ensure responsible scaling, alignment, and oversight.

These are not just tools for advising—they are templates for ethical institutional transformation.

The Risk of Getting It Wrong

If we treat advising as a task to optimize rather than a relationship to uphold, we risk:

Widening equity gaps

Eroding student trust

Undermining developmental learning

AI must not become a shortcut to scale. It must be a commitment to deepen impact.

Final Thought

The goal is not to serve more students with less humanity—

It is to serve every student with greater intentionality,

supported by tools that reflect our highest values,

not just our fastest capabilities.

Let advising lead the way.

Not just in how we adopt AI—but in how we remain human, together.


Have thoughts or want to collaborate? Email me at joepsabado@gmail.com, connect with me on LinkedIn, or visit CampusAIExchange.com for more resources on responsible AI adoption in higher education.

Note: The perspectives shared are personal and do not reflect official positions of my employer.